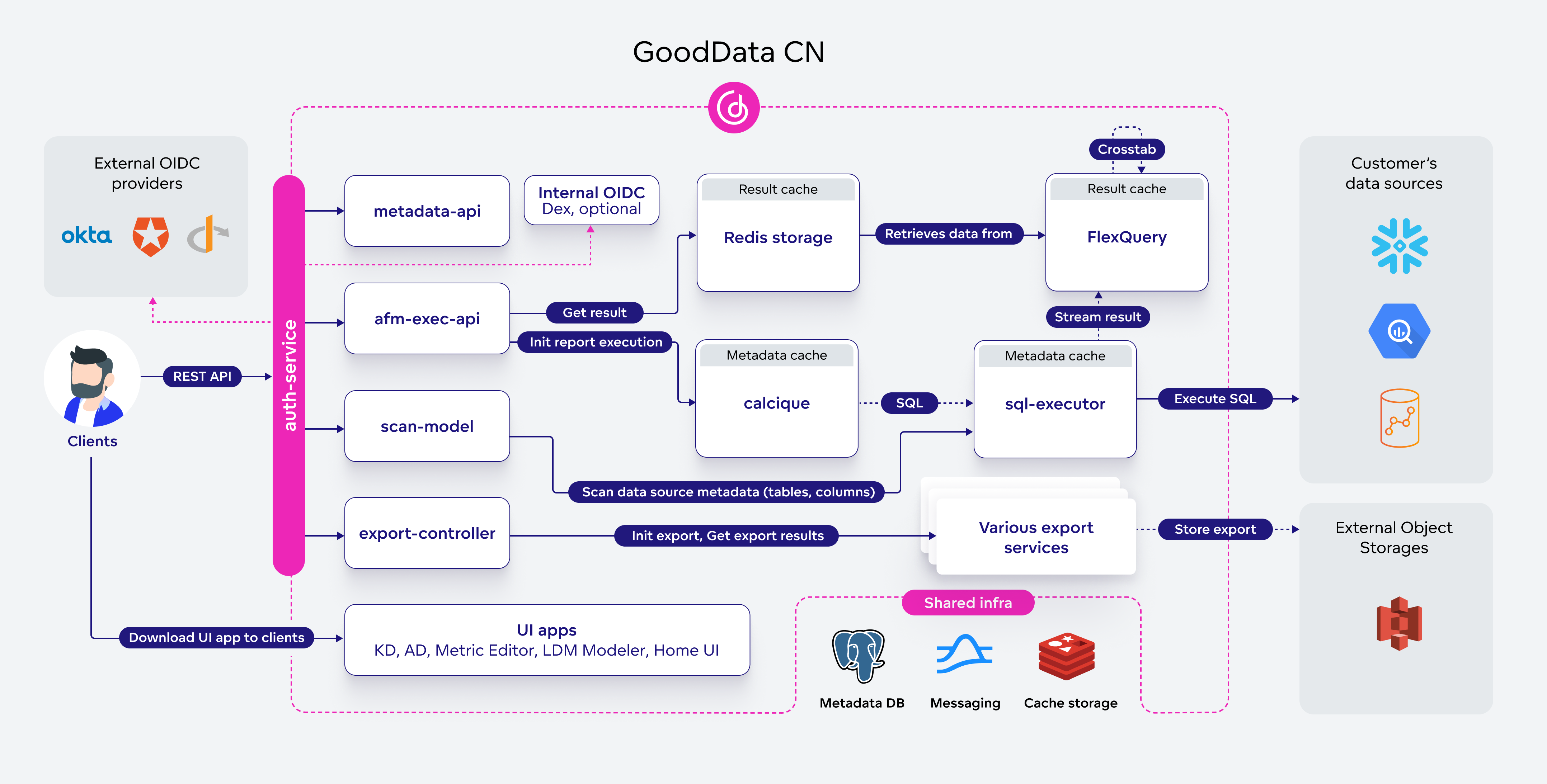

Deployment Architecture

This article provides an overview of GoodData.CN’s microservice architecture and the flow of a report computation request.

Microservices

GoodData.CN is primarily composed of microservices that communicate synchronously through gRPC and asynchronously via Apache Pulsar. External communication with GoodData.CN is possible through the public REST API.

Most of these microservices are built using Kotlin (JVM) with the Spring Boot framework, while others are based on Python.

Authorization Service

This service handles authentication use cases (based on OAuth 2.0) through the OAuth2 Library. It also provides a user context containing basic information about the organization, the authenticated user, and more. This context is useful for all API clients.

GoodData.CN stores session data (Access and ID tokens) as encrypted HTTP cookies to enhance security.

Metadata Service

This service manages all GoodData.CN metadata, such as report and metric definitions, and exposes a public REST API for managing it. Metadata is stored in a PostgreSQL database.

The Calcique Service and SQL Executor cache some metadata. Therefore, the Metadata Service must notify these services about specific metadata changes asynchronously through Apache Pulsar.

For real-time metadata requests, dedicated gRPC calls are used.
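The caching pattern described above, where services keep a local copy of metadata and drop it when a change notification arrives, can be sketched as follows. This is a minimal sketch under stated assumptions: an in-memory `EventBus` stands in for an Apache Pulsar topic, a plain function stands in for the gRPC call to the Metadata Service, and the names `EventBus`, `MetadataCache`, the `metadata-changes` topic, and the `workspace/object` key format are all hypothetical, not GoodData.CN APIs.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """In-memory stand-in for an Apache Pulsar topic (hypothetical, not the Pulsar client)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> None:
        # Pulsar delivers messages asynchronously; delivery is synchronous here for simplicity.
        for handler in self._subscribers[topic]:
            handler(message)


class MetadataCache:
    """Caches metadata objects and drops them when a change notification arrives."""

    def __init__(self, loader: Callable[[str], dict], bus: EventBus) -> None:
        self._loader = loader  # stands in for a gRPC call to the Metadata Service
        self._entries: Dict[str, dict] = {}
        self.loads = 0
        bus.subscribe("metadata-changes", self.invalidate)

    def get(self, key: str) -> dict:
        if key not in self._entries:
            self.loads += 1
            self._entries[key] = self._loader(key)
        return self._entries[key]

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)


# Usage: the second read is served from the cache; a change event forces a reload.
store = {"ws1/revenue": {"title": "Revenue"}}
bus = EventBus()
cache = MetadataCache(lambda key: dict(store[key]), bus)
cache.get("ws1/revenue")
cache.get("ws1/revenue")
store["ws1/revenue"] = {"title": "Net revenue"}
bus.publish("metadata-changes", "ws1/revenue")
print(cache.get("ws1/revenue")["title"], cache.loads)  # Net revenue 2
```

Invalidating instead of pushing the new value keeps the notification small; the next read fetches fresh metadata on demand.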

AFM Service

The AFM Service (AFM stands for Attributes, Filters, and Metrics; metrics were formerly called measures) serves as the gateway for report computation and related use cases. It allows REST clients to define report executions more easily than by creating complex metrics. The service handles the following use cases:

- Report execution: computation of reports.

- Valid objects: Returns compatible Logical Data Model (LDM) entities (attributes, facts, metrics) based on the current context (AFM).

- Label elements: Returns label values (distinct values in the mapped table column).

The service utilizes the MAQL parser, collects relevant metadata from the Metadata Service, and transforms AFM requests into MAQL ASTs. These ASTs can be used by the Calcique Service to generate corresponding SQL statements.
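The "valid objects" use case listed above can be illustrated with a simplified LDM in which each dataset may reference other datasets. The sketch assumes, for illustration only, that a fact can be sliced by the attributes of its own dataset and of every dataset reachable through references; compatible attributes for the current context are then found by a graph traversal and intersected across all facts in use. The dataset, fact, and attribute names are hypothetical, and the real service also considers metrics and other LDM details not modeled here.

```python
from collections import deque
from typing import Dict, List, Optional, Set

# Hypothetical simplified LDM: dataset -> datasets it references (many-to-one).
REFERENCES: Dict[str, List[str]] = {
    "orders": ["customers", "products"],
    "customers": ["regions"],
    "products": [],
    "regions": [],
    "campaigns": ["regions"],
}
ATTRIBUTES: Dict[str, List[str]] = {
    "orders": ["order_status"],
    "customers": ["customer_name"],
    "products": ["product_category"],
    "regions": ["region_name"],
    "campaigns": ["campaign_name"],
}
FACT_DATASET: Dict[str, str] = {"amount": "orders", "budget": "campaigns"}


def reachable_datasets(start: str) -> Set[str]:
    """Breadth-first search over references, including the start dataset."""
    seen = {start}
    queue = deque([start])
    while queue:
        for target in REFERENCES[queue.popleft()]:
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def valid_attributes(facts: List[str]) -> Set[str]:
    """Attributes compatible with every fact in the current context."""
    compatible: Optional[Set[str]] = None
    for fact in facts:
        datasets = reachable_datasets(FACT_DATASET[fact])
        attrs = {a for ds in datasets for a in ATTRIBUTES[ds]}
        compatible = attrs if compatible is None else compatible & attrs
    return compatible or set()


print(sorted(valid_attributes(["amount"])))
print(sorted(valid_attributes(["amount", "budget"])))  # ['region_name']
```

Adding `budget` to the context narrows the result to `region_name`, the only attribute reachable from both facts.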

Scan Model Service

This service forwards requests to the SQL Executor Service, which is the service that communicates with the data source. Once the SQL Executor Service retrieves all data source metadata, it returns the result to the Scan Model Service, which then uses the metadata to generate an LDM based on naming convention parameters. The Scan Model Service is also responsible for testing connectivity to data sources.
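Generating an LDM from naming conventions can be sketched as classifying scanned columns by prefix. The prefixes used here (`a__` for attributes, `f__` for facts, `r__` for references to another table) and the `ScannedColumn` and `Dataset` types are assumptions for illustration; the actual naming convention parameters accepted by the Scan Model Service may differ.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ScannedColumn:
    """Column metadata as it might come back from a database scan (hypothetical)."""
    table: str
    name: str
    data_type: str


@dataclass
class Dataset:
    name: str
    attributes: List[str] = field(default_factory=list)
    facts: List[str] = field(default_factory=list)
    references: List[str] = field(default_factory=list)


# Hypothetical naming convention parameters.
PREFIXES = {"attribute": "a__", "fact": "f__", "reference": "r__"}


def generate_ldm(columns: List[ScannedColumn]) -> Dict[str, Dataset]:
    """Group columns into one dataset per table and classify them by prefix."""
    datasets: Dict[str, Dataset] = {}
    for col in columns:
        ds = datasets.setdefault(col.table, Dataset(col.table))
        if col.name.startswith(PREFIXES["fact"]):
            ds.facts.append(col.name[len(PREFIXES["fact"]):])
        elif col.name.startswith(PREFIXES["reference"]):
            # The part after the prefix names the referenced table.
            ds.references.append(col.name[len(PREFIXES["reference"]):])
        elif col.name.startswith(PREFIXES["attribute"]):
            ds.attributes.append(col.name[len(PREFIXES["attribute"]):])
        # Columns without a known prefix are skipped in this sketch.
    return datasets


columns = [
    ScannedColumn("orders", "a__status", "VARCHAR"),
    ScannedColumn("orders", "f__amount", "NUMERIC"),
    ScannedColumn("orders", "r__customers", "INTEGER"),
    ScannedColumn("orders", "note", "VARCHAR"),
    ScannedColumn("customers", "a__name", "VARCHAR"),
]
ldm = generate_ldm(columns)
print(ldm["orders"])
```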

Calcique Service

The Calcique Service is the core component of GoodData.CN. Built on the Apache Calcite project, it provides features for manipulating SQL trees and generating valid SQL statements for all supported data sources.

Inputs for this service include the MAQL AST and the LDM. The Calcique Service retrieves these inputs from the Metadata Service and caches them (cache invalidation is done through Apache Pulsar). The inputs are then used to generate optimized query trees, which are sent via Apache Pulsar to the SQL Executor Service.
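To show the kind of transformation involved, here is a toy generator that turns a small query tree into SQL using an LDM mapping from logical identifiers to physical tables and columns. It is a deliberately simplified illustration, not Apache Calcite and not the Calcique Service: the node types `Aggregate` and `Query`, the `fact/...` and `attribute/...` identifier format, the mapping, and the generated SQL shape are all hypothetical, and joins between datasets and dialect differences are left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Aggregate:
    function: str  # e.g. "SUM"
    fact: str      # logical fact identifier


@dataclass
class Query:
    metrics: List[Aggregate]
    group_by: List[str]  # logical attribute identifiers


# Hypothetical LDM mapping: logical identifier -> (table, column).
LDM: Dict[str, Tuple[str, str]] = {
    "fact/amount": ("orders", "amount"),
    "attribute/status": ("orders", "status"),
}

ALLOWED_FUNCTIONS = {"SUM", "AVG", "MIN", "MAX", "COUNT"}


def to_sql(query: Query) -> str:
    """Resolve logical identifiers through the LDM and emit a single-table SELECT."""
    tables = set()
    select: List[str] = []
    group: List[str] = []
    for attr in query.group_by:
        table, column = LDM[attr]
        tables.add(table)
        select.append(f"{table}.{column}")
        group.append(f"{table}.{column}")
    for i, metric in enumerate(query.metrics):
        if metric.function not in ALLOWED_FUNCTIONS:
            raise ValueError(f"unsupported function: {metric.function}")
        table, column = LDM[metric.fact]
        tables.add(table)
        select.append(f"{metric.function}({table}.{column}) AS m{i}")
    if len(tables) != 1:
        raise NotImplementedError("joins are out of scope for this sketch")
    sql = f"SELECT {', '.join(select)} FROM {tables.pop()}"
    if group:
        sql += f" GROUP BY {', '.join(group)}"
    return sql


print(to_sql(Query([Aggregate("SUM", "fact/amount")], ["attribute/status"])))
# SELECT orders.status, SUM(orders.amount) AS m0 FROM orders GROUP BY orders.status
```

A real implementation works on relational trees rather than strings, which is what makes optimization and per-dialect SQL generation possible.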

Result Cache Service

The service receives results from the SQL Executor Service (streamed through gRPC) and stores them as raw results under rawId. Afterward, a process called cross-tabulation begins, which is responsible for pivoting, sorting, and pagination (dividing results into multiple pages). Once the cross-tabulation is completed, the results are stored under resultId in FlexQuery.
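Cross-tabulation as described above (pivoting, sorting, and pagination) can be sketched over raw rows. This minimal sketch assumes raw results arrive as `(row_key, column_key, value)` tuples with each pair appearing once, as after a `GROUP BY`; the `crosstab` and `paginate` functions and the page size are hypothetical and do not reflect the Result Cache Service's internal API.

```python
from typing import Dict, List, Optional, Tuple

RawRow = Tuple[str, str, float]
Grid = List[List[Optional[float]]]


def crosstab(raw: List[RawRow]) -> Tuple[List[str], List[str], Grid]:
    """Pivot raw rows into a grid; rows and columns are sorted by key."""
    row_keys = sorted({r for r, _, _ in raw})
    col_keys = sorted({c for _, c, _ in raw})
    # Assumes each (row, column) pair is unique, as in grouped SQL output.
    cells: Dict[Tuple[str, str], float] = {(r, c): v for r, c, v in raw}
    # Missing combinations become None (an empty cell).
    grid = [[cells.get((r, c)) for c in col_keys] for r in row_keys]
    return row_keys, col_keys, grid


def paginate(grid: Grid, page: int, page_size: int) -> Grid:
    """Return one page of rows; pages are numbered from zero."""
    start = page * page_size
    return grid[start:start + page_size]


raw = [
    ("EMEA", "2023", 10.0),
    ("AMER", "2023", 7.5),
    ("EMEA", "2024", 12.0),
    ("APAC", "2024", 3.0),
]
rows, cols, grid = crosstab(raw)
print(rows)  # ['AMER', 'APAC', 'EMEA']
print(cols)  # ['2023', '2024']
print(paginate(grid, page=0, page_size=2))  # [[7.5, None], [None, 3.0]]
```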

Once the service has used up its file storage (1 GB by default), each new cache entry triggers eviction of the least recently used (LRU) entries to keep the file storage under its limit.
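The size-bounded LRU eviction described above can be sketched with an ordered dictionary that tracks total stored bytes. This is a minimal in-memory sketch, assuming entries are byte strings keyed by result ID; `SizeBoundedLruCache` is a hypothetical name, and the real service works against file storage with metadata kept in Redis rather than a single Python dictionary.

```python
from collections import OrderedDict
from typing import Optional


class SizeBoundedLruCache:
    """Evicts least recently used entries once total size exceeds a byte limit."""

    def __init__(self, limit_bytes: int) -> None:
        self.limit_bytes = limit_bytes
        self.used_bytes = 0
        self._entries: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key: str, value: bytes) -> None:
        if len(value) > self.limit_bytes:
            raise ValueError("entry is larger than the whole cache")
        if key in self._entries:
            self.used_bytes -= len(self._entries.pop(key))
        self._entries[key] = value  # new entries go to the most recently used end
        self.used_bytes += len(value)
        # Evict from the least recently used end until back under the limit.
        while self.used_bytes > self.limit_bytes:
            _, evicted = self._entries.popitem(last=False)
            self.used_bytes -= len(evicted)

    def __contains__(self, key: str) -> bool:
        return key in self._entries


cache = SizeBoundedLruCache(limit_bytes=10)
cache.put("result-a", b"aaaa")
cache.put("result-b", b"bbbb")
cache.get("result-a")           # result-a becomes the most recently used entry
cache.put("result-c", b"cccc")  # 12 bytes > 10, so result-b is evicted
print("result-b" in cache, "result-a" in cache)  # False True
```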

Redis is used to store metadata about the cached data in FlexQuery.

SQL Executor Service

This service is responsible for executing queries, retrieving results, storing results in the cache, managing database connection pooling, scanning database metadata (schemas, tables, columns, constraints), and testing data source configuration correctness.

For JDBC, HikariCP is used, and for BigQuery, the Google Cloud BigQuery Client for Java is employed.
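Connection pooling, which HikariCP provides for JDBC data sources, comes down to reusing a bounded set of open connections instead of opening one per query. The sketch below shows the idea with Python's standard `sqlite3` and `queue` modules; `ConnectionPool` is a hypothetical class and omits much of what a production pool such as HikariCP offers (connection validation, leak detection, maximum connection lifetime).

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Callable, Iterator


class ConnectionPool:
    """Fixed-size pool: connections are opened once up front and reused."""

    def __init__(self, factory: Callable[[], sqlite3.Connection], size: int) -> None:
        self._idle: "queue.Queue[sqlite3.Connection]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[sqlite3.Connection]:
        # Blocks until a connection is free; raises queue.Empty after the timeout.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool instead of closing it

    def idle_count(self) -> int:
        return self._idle.qsize()


pool = ConnectionPool(lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2)
with pool.connection() as conn:
    print(conn.execute("SELECT 1 + 1").fetchone()[0])  # 2
    print(pool.idle_count())  # 1
print(pool.idle_count())  # 2
```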

Export Services

Services responsible for exporting visualizations as PDF, XLSX, and CSV files.

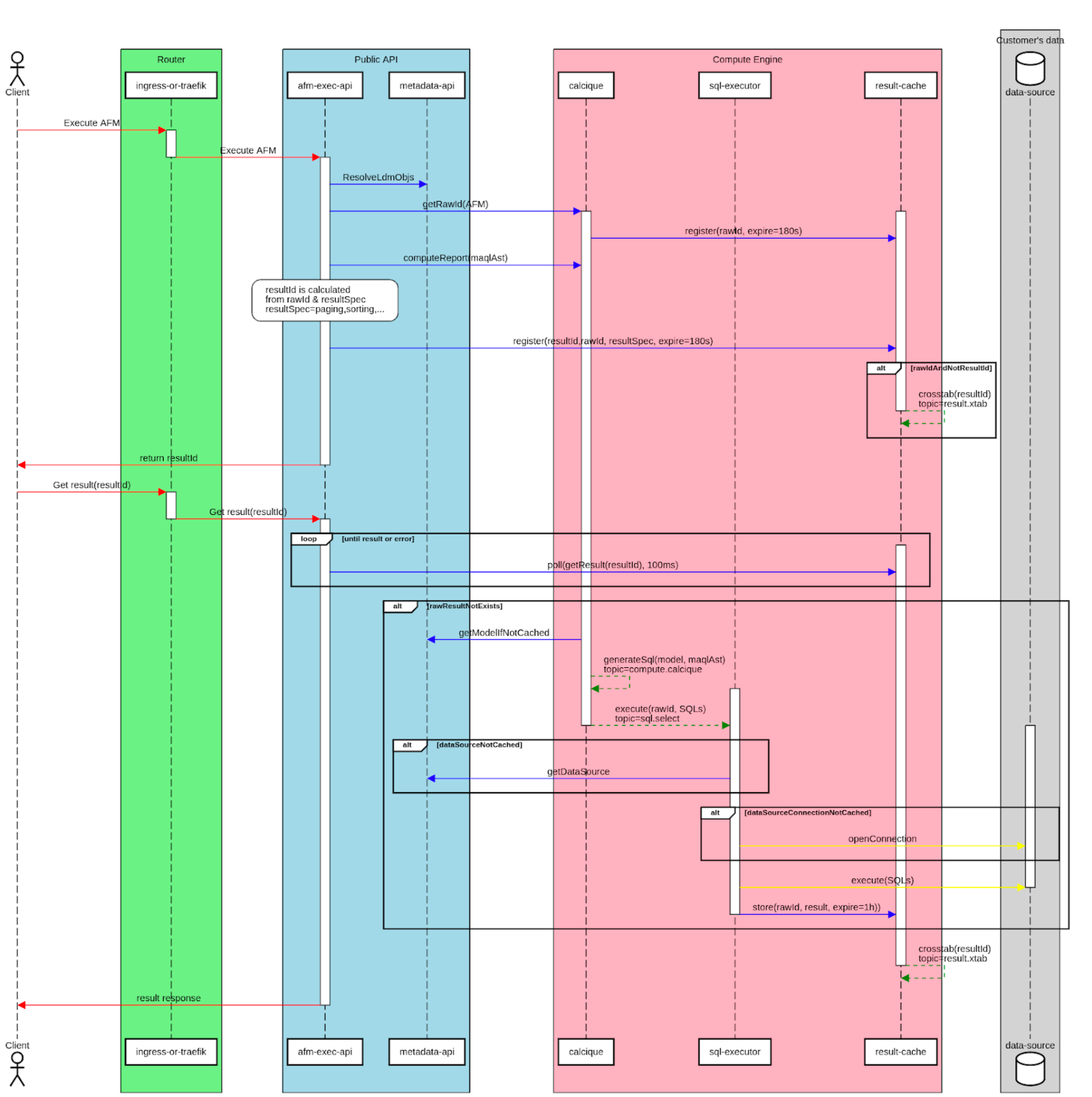

Report Computation Request

Report computation is a common operation in GoodData.CN. The following text and flow diagram illustrate how a report computation request is processed.

The entire flow consists of:

- A POST request to `/api/v1/actions/workspaces/{workspaceId}/execution/afm/execute` with an AFM (Attributes, Filters, and Measures) definition in the request body. The response includes a resultId that is generated deterministically from the request, so repeated requests for the same report reuse the result cache and avoid additional computations on the data source.

- The AFM body is translated into MAQL.

- Using the LDM (logical data model), object identifiers (facts, metrics, attributes, etc.) are resolved in MAQL. The Calcique Service can then generate corresponding SQL statements.

- SQL statements are executed against the data source, and raw results are stored in the cache under rawId.

- Cross-tabulation is applied, and the final results are stored in the cache under resultId.

- A GET request to `/api/v1/actions/workspaces/{workspaceId}/execution/afm/execute/result/{resultId}` retrieves the result.
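The deterministic resultId from the first step can be illustrated by hashing a canonical serialization of the request, so that identical AFM bodies map to the same cache key regardless of key order. The simplified AFM shape, the use of SHA-256 over sorted-key JSON, and the `execute` helper with its in-memory result cache are assumptions for illustration; GoodData.CN's actual ID derivation and AFM schema may differ.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List

ResultCache = Dict[str, Any]


def result_id(workspace_id: str, afm: Dict[str, Any]) -> str:
    """Derive a stable ID: same workspace and AFM content -> same ID."""
    canonical = json.dumps(
        {"workspace": workspace_id, "afm": afm},
        sort_keys=True,          # key order in the request must not matter
        separators=(",", ":"),   # no whitespace differences
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def execute(workspace_id: str, afm: Dict[str, Any], cache: ResultCache,
            compute: Callable[[Dict[str, Any]], Any]) -> str:
    rid = result_id(workspace_id, afm)
    if rid not in cache:
        cache[rid] = compute(afm)  # hits the data source only on a cache miss
    return rid


# Hypothetical, simplified AFM bodies: same content, different key order.
afm_1 = {"attributes": ["region"], "filters": [], "measures": ["revenue"]}
afm_2 = {"measures": ["revenue"], "filters": [], "attributes": ["region"]}

calls: List[Dict[str, Any]] = []


def compute(afm: Dict[str, Any]) -> Dict[str, Any]:
    calls.append(afm)  # records each simulated data-source computation
    return {"rows": []}


result_cache: ResultCache = {}
id_1 = execute("ws1", afm_1, result_cache, compute)
id_2 = execute("ws1", afm_2, result_cache, compute)
print(id_1 == id_2, len(calls))  # True 1
```

The follow-up GET request then only needs the ID to look the result up in the cache.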

UI Applications

This service includes all GoodData UI applications: